Anh Nguyen, Galen Pogoncheff, Ban Xuan Dong, Nam Bui, Hoang Truong, Nhat Pham, Linh Nguyen, Hoang Nguyen-Huu, Khue Bui-Diem, Quan Vu-Tran-Thien, Sy Duong-Quy, Sangtae Ha, and Tam Vu

Abstract

Difficulty falling asleep is one of the typical symptoms of insomnia. However, the intervention therapies available today, ranging from pharmaceutical to hi-tech tailored solutions, remain ineffective because they lack precise real-time sleep tracking, timely feedback on the therapies, and the ability to keep people asleep during the night. This project aims to enhance the efficacy of such interventions by proposing a novel sleep aid system that can sense multiple physiological signals continuously and simultaneously control auditory stimulation to evoke appropriate brain responses for fast sleep promotion. The system, a lightweight, comfortable, and user-friendly headband, employs a comprehensive set of algorithms and dedicated, custom-designed audio stimuli. Compared to the gold-standard device in 883 sleep studies on 377 subjects, the proposed system achieves (1) a strong correlation (0.89 ± 0.03) between the physiological signals it acquires and those from the gold-standard PSG, (2) an 87.8% agreement on automatic sleep scoring with the consensus scored by sleep technicians, and (3) a successful non-pharmacological real-time stimulation that shortens the time taken to fall asleep by 24.1 min. In conclusion, our solution exceeds existing ones in promoting fast sleep onset, tracking sleep state accurately, and achieving high social acceptance, as demonstrated through a reliable large-scale evaluation.

Anh Nguyen, Raghda Alqurashi, Zohreh Raghebi, Farnoush Banaei-kashani, Ann C. Halbower, and Tam Vu

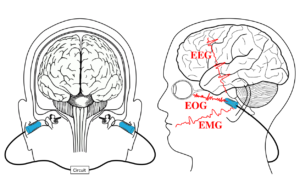

Abstract

This project introduces LIBS, a light-weight and inexpensive wearable sensing system that can capture the electrical activities of the human brain, eyes, and facial muscles with two pairs of custom-built flexible electrodes, each of which is embedded on an off-the-shelf foam earplug. We also propose a supervised nonnegative matrix factorization algorithm that adaptively analyzes and extracts these bioelectrical signals from a single mixed in-ear channel collected by the sensor. While LIBS can enable a wide class of low-cost self-care, human-computer interaction, and health monitoring applications, we demonstrate its medical potential by developing an autonomous whole-night sleep staging system utilizing LIBS’s outputs. We constructed a hardware prototype from off-the-shelf electronic components and used it to conduct 38 hours of sleep studies on 8 participants over a period of 30 days. Our evaluation results show that LIBS can monitor biosignals representing brain activities, eye movements, and muscle contractions with excellent fidelity, such that it can be used for sleep stage classification with an average accuracy of more than 95%.

Hoang Truong, Nam Bui, Zohreh Raghebi, Marta Ceko, Nhat Pham, Phuc Nguyen, Anh Nguyen, Taeho Kim, Katrina Siegfried, Evan Stene, Taylor Tvrdy, Logan Weinman, Thomas Payne, Devin Burke, Thang Dinh, Sidney D’Mello, Farnoush Banaei-Kashani, Tor Wager, Pavel Goldstein, and Tam Vu

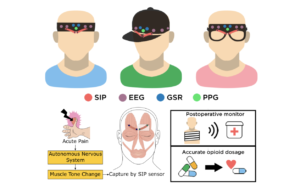

Abstract

Over 50 million people undergo surgeries each year in the United States, with over 70% of them filling opioid prescriptions within one week of the surgery. Due to the highly addictive nature of these opiates, the post-surgical window is a crucial time for pain management to ensure accurate prescription of opioids. Drug prescription today relies primarily on self-reported pain levels to determine the frequency and dosage of pain medication. Patient pain self-reports are, however, influenced by subjective pain tolerance, memories of past painful episodes, current context, and the patient’s integrity in reporting their pain level. Therefore, objective measures of pain are needed to better inform pain management. This project explores a wearable system, named Painometry, which objectively quantifies users’ pain perception based on multiple physiological signals and facial expressions of pain. We propose a sensing technique, called sweep impedance profiling (SIP), to capture the movement of the facial muscle corrugator supercilii, one of the important physiological expressions of pain. We deploy SIP together with other biosignals, including electroencephalography (EEG), photoplethysmogram (PPG), and galvanic skin response (GSR), for pain quantification. From the anatomical and physiological correlations of pain with these signals, we designed Painometry, a multimodality sensing system that can accurately and safely quantify different levels of pain. We prototyped Painometry by building custom hardware, firmware, and associated software. Our evaluations use the prototype on 23 subjects, which corresponds to 8832 data points from 276 minutes of an IRB-approved experimental pain-inducing protocol. Using leave-one-out cross-validation to estimate performance on unseen data, Painometry achieves 89.5% and 76.7% quantification accuracy under 3 and 4 pain states, respectively.
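The leave-one-out cross-validation mentioned above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy one-dimensional features, the three labels, and the nearest-neighbor classifier are hypothetical stand-ins, not Painometry's actual features or model, and the abstract does not say whether samples or subjects are held out.

```python
def nearest_neighbor_predict(train, query):
    """Return the label of the training sample whose feature is closest to `query`."""
    closest = min(train, key=lambda sample: abs(sample[0] - query))
    return closest[1]


def leave_one_out_accuracy(samples):
    """Hold out each sample once, train on the rest, and score the held-out sample."""
    correct = 0
    for i, (feature, label) in enumerate(samples):
        train = samples[:i] + samples[i + 1:]  # every sample except the held-out one
        if nearest_neighbor_predict(train, feature) == label:
            correct += 1
    return correct / len(samples)


# Hypothetical toy data: (feature value, pain state). Not from the study.
samples = [
    (0.1, "low"), (0.2, "low"), (0.3, "low"),
    (1.0, "medium"), (1.1, "medium"), (1.2, "medium"),
    (2.0, "high"), (2.1, "high"), (2.2, "high"),
]
accuracy = leave_one_out_accuracy(samples)
print(f"leave-one-out accuracy: {accuracy:.2f}")
```

Because each prediction is made by a model that never saw the held-out sample, the averaged score approximates performance on unseen data.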

Nam Bui, Nhat Pham, Jessica Jacqueline Barnitz, Phuc Nguyen, Hoang Truong, Taeho Kim, Anh Nguyen, Zhanan Zou, Nicholas Farrow, Jianliang Xiao, Robin Deterding, Thang Dinh, and Tam Vu

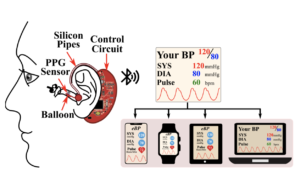

Abstract

Frequent blood pressure (BP) assessment is key to the diagnosis and treatment of many severe diseases, such as heart failure, kidney failure, hypertension, and hemodialysis. Current “gold-standard” BP measurement techniques require the complete blockage of blood flow, which causes discomfort and disruption to normal activity when the assessment is done repetitively and frequently. Unfortunately, patients with hypertension or hemodialysis often have to get their BP measured every 15 minutes for a duration of 4-5 hours or more. The discomfort of wearing a cumbersome, mobility-limiting device affects their normal activities. In this work, we propose a device called eBP that measures BP from inside the user’s ear, aiming to minimize the measurement’s impact on users’ normal activities while maximizing comfort. eBP has 3 key components: (1) a light-based pulse sensor attached to an inflatable pipe that goes inside the ear, (2) a digital air pump with a fine controller, and (3) a BP estimation algorithm. In contrast to existing devices, eBP introduces a novel technique that eliminates the need to block the blood flow inside the ear, which alleviates the user’s discomfort. We prototyped eBP’s custom hardware and software and evaluated the system through a comparative study on 35 subjects. The study shows that eBP achieves an average error of 1.8 mmHg and -3.1 mmHg and an error standard deviation of 7.2 mmHg and 7.9 mmHg for systolic (high-pressure value) and diastolic (low-pressure value) pressure, respectively. These errors are within the acceptable margins regulated by the FDA’s AAMI protocol, which allows mean errors of up to 5 mmHg and a standard deviation of up to 8 mmHg.
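The comparison against the quoted margins is simple arithmetic and can be checked with a short sketch. The thresholds and error values below are taken from the abstract; the helper name `within_margins` is hypothetical, and treating the mean-error limit as a bound on the absolute value is an assumption.

```python
# Margins quoted in the abstract: mean error up to 5 mmHg,
# standard deviation up to 8 mmHg.
MAX_MEAN_ERROR_MMHG = 5.0
MAX_SD_ERROR_MMHG = 8.0


def within_margins(mean_error, sd_error):
    """Hypothetical helper: True if both errors fall inside the quoted margins."""
    # Assumption: the mean-error limit applies to the magnitude of the error.
    return abs(mean_error) <= MAX_MEAN_ERROR_MMHG and sd_error <= MAX_SD_ERROR_MMHG


# (mean error, standard deviation) in mmHg, as reported in the abstract.
reported = {"systolic": (1.8, 7.2), "diastolic": (-3.1, 7.9)}
checks = {name: within_margins(mean, sd) for name, (mean, sd) in reported.items()}

for name, ok in checks.items():
    print(f"{name}: {'within' if ok else 'outside'} the quoted margins")
```

Both reported pairs satisfy the check, although the diastolic standard deviation of 7.9 mmHg sits only 0.1 mmHg below the 8 mmHg limit.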

Phuc Nguyen, Hoang Truong, Mahesh Ravindranathan, Anh Nguyen, Richard Han, and Tam Vu

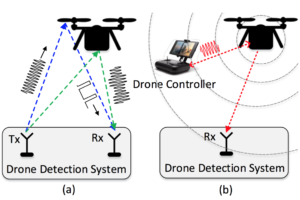

Abstract

Drones are increasingly flying in sensitive airspace where their presence may cause harm, such as near airports, forest fires, large crowded events, secure buildings, and even jails. This problem is likely to grow given the rapid proliferation of drones for commerce, monitoring, recreation, and other applications. A cost-effective detection system is needed to warn of the presence of drones in such cases. In this project, we explore the feasibility of inexpensive RF-based detection of the presence of drones. We examine whether physical characteristics of the drone, such as body vibration and body shifting, can be detected in the wireless signal transmitted by drones during communication. We consider whether the received drone signals can be uniquely differentiated from other mobile wireless phenomena, such as cars equipped with WiFi or humans carrying a mobile phone. The sensitivity of detection at distances of hundreds of meters, as well as the accuracy of the overall detection system, is evaluated using a software-defined radio (SDR) implementation.

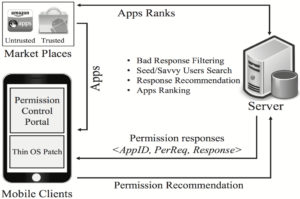

Bahman Rashidi, Carol Fung, Anh Nguyen, Tam Vu, and Elisa Bertino

Abstract

In the current Android architecture, users have to decide whether an app is safe to use or not. Tech-savvy users can make correct decisions to avoid unnecessary privacy breaches. However, most users may have difficulty making correct decisions. DroidNet is an Android permission recommendation framework based on crowdsourcing. In this framework, DroidNet runs new apps in probation mode without granting their permission requests up-front. It provides recommendations on whether to accept or reject the permission requests based on decisions from peer expert users. To seek expert users, we propose an expertise rating algorithm using a transitional Bayesian inference model. The recommendation is based on the aggregated expert responses and their confidence level. Our evaluation results demonstrate that, given a sufficient number of experts in the network, DroidNet can provide accurate recommendations and cover the majority of app requests given a small coverage from a small set of initial experts.

Anh Nguyen, Raghda Alqurashi, Ann C. Halbower, and Tam Vu

Abstract

It has been medically proven that one’s sleep quality directly affects his or her personal health, social behavior, and work effectiveness. Understanding sleep quality, hence, is an important topic and has attracted a vast amount of research effort. In addition, with the advent and ever-increasing number of mobile and wearable devices, many attempts have been made to monitor one’s sleep using these ubiquitous devices. However, none has explored the complex relationship between sleep quality and the sleeping environment. In this project, we propose mSleepWatcher as an ongoing attempt to answer the question "Why didn’t I sleep well?". By mining the environmental sensing information collected by built-in sensors of off-the-shelf mobile and wearable devices in combination with the sleep quality sensing information, a set of causality analysis techniques is adopted and applied to exploit the temporal dependencies between the environment during sleep and sleep quality. From the mSleepWatcher system, latent relationships between the environment and sleep quality can be inferred, which are then used to recommend that users adjust their sleep environment for better sleep. The proposed system is the first attempt to bring a fresh picture of sleep study associated with different scenarios of environmental variations. Drawing on our preliminary work, both the strengths and limitations of developing the complete system on mobile devices are discussed in detail.

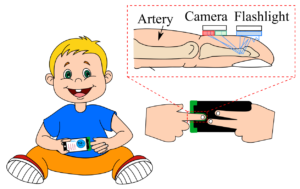

Nam Bui, Anh Nguyen, Phuc Nguyen, Hoang Truong, Ashwin Ashok, Thang Dinh, Robin Deterding, and Tam Vu

Abstract

Accurately measuring and monitoring a patient’s blood oxygen level plays a critical role in today’s clinical diagnosis and healthcare practices. Existing techniques, however, either require dedicated hardware or produce inaccurate measurements. To fill this gap, we propose a phone-based oxygen level estimation system, called PhO2, using camera and flashlight functions that are readily available on today’s off-the-shelf smartphones. Since a phone’s camera and flashlight are not made for this purpose, utilizing them for oxygen level estimation poses many challenges. We introduce a cost-effective add-on together with a set of algorithms for spatial and spectral optical signal modulation to amplify the optical signal of interest while minimizing noise. A light-based pressure detection algorithm and feedback mechanism are also proposed to mitigate the negative impacts of the user’s behavior during the measurement. We also derive a non-linear referencing model that allows PhO2 to estimate the oxygen level from color intensity ratios produced by the smartphone’s camera. An evaluation using a custom-built optical element on a COTS smartphone with 6 subjects shows that PhO2 can estimate the oxygen saturation within a 3.5% error rate compared to FDA-approved gold-standard pulse oximetry. A user study to gauge the reception of PhO2 shows that users are comfortable self-operating the device and willing to carry the device when going out.

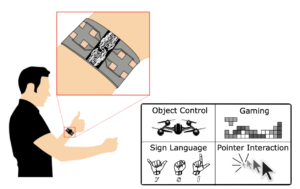

Hoang Truong, Shuo Zhang, Ufuk Muncuk, Phuc Nguyen, Nam Bui, Anh Nguyen, Qin Lv, Kaushik Chowdhury, Thang Dinh, and Tam Vu

Abstract

We present CapBand, a battery-free hand gesture recognition wearable in the form of a wristband. The key challenges in creating such a system are (1) to sense useful hand gestures at ultra-low power so that the device can be powered by the limited energy harvestable from the surrounding environment and (2) to make the system work reliably without requiring training every time a user puts on the wristband. We present successive capacitance sensing, an ultra-low power sensing technique, to capture small skin deformations due to muscle and tendon movements on the user’s wrist, which correspond to specific groups of wrist muscles representing the gestures being performed. We build a wrist muscles-to-gesture model, based on which we develop a hand gesture classification method using both motion and static features. To eliminate the need for per-usage training, we propose a kernel-based on-wrist localization technique to detect the CapBand’s position on the user’s wrist. We prototype CapBand with a custom-designed capacitance sensor array on two flexible circuits driven by a custom-built electronic board, a heterogeneous material-made, deformable silicone band, and a custom-built energy harvesting and management module. Evaluations on 20 subjects show 95.0% accuracy of gesture recognition when recognizing 15 different hand gestures and 95.3% accuracy of on-wrist localization.

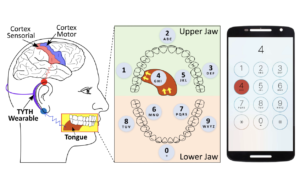

Phuc Nguyen, Nam Bui, Anh Nguyen, Hoang Truong, Abhijit Suresh, Matthew Whitlock, Duy Pham, Thang Dinh, and Tam Vu

Abstract

This project explores a new wearable system, called TYTH, that enables a novel form of human-computer interaction based on the relative location and interaction between the user’s tongue and teeth. TYTH allows its user to interact with a computing system by tapping on their teeth. This form of interaction is analogous to using a finger to type on a keypad, except that the tongue substitutes for the finger and the teeth for the keyboard. We study the neurological and anatomical structures of the tongue to design TYTH so that the obtrusiveness and social awkwardness caused by the wearable are minimized while its accuracy and sensing sensitivity are maximized. From behind the user’s ears, TYTH senses the brain and muscle signals that control tongue movement and captures the miniature skin surface deformation caused by tongue movement. We model the relationship between tongue movement and the recorded signals, from which a tongue localization technique and a tongue-teeth tapping detection technique are derived. Through a prototype implementation and an evaluation with 15 subjects, we show that TYTH can be used as a form of hands-free human-computer interaction with an 88.61% detection rate and a promising adoption rate by users.

Moatassem Abdallah, Caroline Clevenger, Tam Vu, and Anh Nguyen

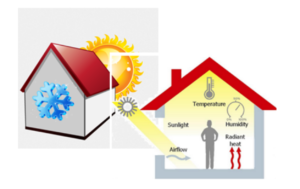

Abstract

Thermal comfort of building occupants is a major criterion in evaluating the performance of building systems. It is also a dominant factor in designing and optimizing a building’s operation. However, existing thermal comfort models, such as Fanger’s model currently adopted by ASHRAE Standard 55, rely on factors that require bulky and expensive equipment to measure. This project attempts to take a radically different approach towards measuring the thermal comfort of building occupants by leveraging the ever-increasing capacity and capability of mobile and wearable devices. Today’s commercial off-the-shelf (COTS) wearable devices can unobtrusively capture a number of important parameters that may be used to measure the thermal comfort of building occupants, including ambient air temperature, relative humidity, skin temperature, perspiration rate, and heart rate. This research evaluates such opportunities by fusing traditional environmental sensing data streams with newly available wearable sensing information. Furthermore, it identifies challenges in using existing wearable devices and in developing new models to predict human thermal comfort. Findings from this exploratory study identify the inaccuracy of sensors in cellphones and wearables as a challenge, yet one that can be addressed using customized wearables. The study also suggests a high potential for developing new models to predict human thermal sensation using artificial neural networks and additional factors that can be individually, unobtrusively, and dynamically measured using wearables.